You’ll need backside-illuminated sensors with noise floor reduction and multi-spectral fusion combining visible, infrared, and radar modalities to detect targets below 32×32 pixels. Advanced feature extraction using truncated path signatures and WideResNet-28-2 architectures maintains AUC >0.998 across contrast levels scaled from 50% to 150% of nominal, while hardware-in-the-loop testing validates real-time inference timing under actual electrical noise conditions. Quantum dot photodetectors improve infrared sensitivity, and computational phase synchronization creates virtual apertures exceeding physical sensor dimensions. Combined with systematic calibration and atmospheric correction protocols, these extend your detection range and ease the fundamental resolution-versus-range trade-offs limiting current systems.

Key Takeaways

- Advanced backside-illuminated sensors reduce noise floors and optimize light absorption pathways to capture weak signatures from small, distant targets.

- Quantum materials like quantum dot photodetectors and graphene enhance infrared sensitivity while reducing cooling requirements for system simplification.

- Multi-spectral fusion combines visible, infrared, and radar data through cross-modal feature enhancement to improve detection in challenging environments.

- Computational aperture technologies use phase synchronization and iterative calibration to create virtual apertures exceeding physical sensor size limitations.

- Feature extraction techniques maximize information from minimal pixel data by combining texture, frequency, and spatial features into unified architectures.

Challenges in Acquiring Diminutive Objects at Extended Ranges

When detector networks process small objects at extended ranges, they encounter fundamental information bottlenecks that degrade performance across multiple dimensions.

You’ll find EfficientDet achieves only 12% mAP for small objects versus 51% for large ones—a 4× performance gap. Low-resolution inputs lose critical detail, rendering targets indistinguishable from noise. Objects shrink below predefined anchor regions at distance, causing scale mismatches between training and deployment.

Sensor calibration errors compound these issues, introducing systematic localization drift. Terrain masking creates partial occlusions that hide already-sparse features. Targets that may span a pixel or less can move faster than 1 pixel/frame in apparent motion, straining temporal tracking. Background subtraction struggles to maintain an adaptive background model when small objects appear stationary relative to dynamic scene elements. Background clutter generates false positives when limited spatial context prevents discrimination. Minimal pixel representation restricts the visual detail available for classification, forcing networks to make predictions from severely degraded feature maps.

These constraints force you to balance resolution costs against detection capability—a trade-off that fundamentally limits system autonomy.
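
To make the resolution-versus-range trade-off concrete, the short Python sketch below estimates how many pixels a target spans from the pixel pitch, focal length, and range, using the small-angle approximation (one pixel subtends pitch ÷ focal length radians). The 15 µm pitch, 300 mm lens, and 2 m target are hypothetical values chosen for illustration, not parameters of any system mentioned here.

```python
# Hypothetical optics: 15 µm pixel pitch behind a 300 mm lens (illustrative values only).

def ifov_rad(pixel_pitch_m: float, focal_length_m: float) -> float:
    """Angle subtended by one pixel, using the small-angle approximation."""
    return pixel_pitch_m / focal_length_m


def pixels_on_target(target_size_m: float, range_m: float,
                     pixel_pitch_m: float, focal_length_m: float) -> float:
    """Approximate number of pixels spanned by a target of the given size at the given range."""
    return target_size_m / (range_m * ifov_rad(pixel_pitch_m, focal_length_m))


def max_range_for_pixels(target_size_m: float, required_pixels: float,
                         pixel_pitch_m: float, focal_length_m: float) -> float:
    """Largest range at which the target still spans `required_pixels` pixels."""
    return target_size_m / (required_pixels * ifov_rad(pixel_pitch_m, focal_length_m))


if __name__ == "__main__":
    PITCH_M, FOCAL_M, TARGET_M = 15e-6, 0.300, 2.0
    for range_m in (1_000, 5_000, 10_000, 20_000):
        px = pixels_on_target(TARGET_M, range_m, PITCH_M, FOCAL_M)
        print(f"{range_m / 1000:5.1f} km -> {px:5.1f} px across the target")
    r32 = max_range_for_pixels(TARGET_M, 32, PITCH_M, FOCAL_M)
    print(f"32 px across a 2 m target requires range <= {r32:.0f} m")
```

With these assumed values, the target shrinks from about 40 pixels across at 1 km to 4 pixels at 10 km, and it stays at or above 32 pixels only out to roughly 1.25 km. Doubling the focal length doubles those figures but narrows the field of view.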

Advanced Sensor Technologies for Enhanced Detection Capabilities

You’ll achieve significant detection gains by integrating multi-spectral sensors that combine visible, infrared, and radar modalities into unified acquisition systems.

Infrared sensors extend your operational range through MWIR imaging for long-distance surveillance and SWIR capability that penetrates obscurants while differentiating natural from synthetic materials.

High-definition aperture systems, when paired with feature pyramid networks and attention mechanisms, boost your small target detection accuracy from 85% to 92% while maintaining 12 fps processing speeds for real-time applications. In-sensor AI computation during photodetection eliminates traditional data-processing bottlenecks by performing spectral analysis directly at the point of image capture. Hardware-in-the-loop test environments enable you to validate automatic target recognition performance by simulating synthetic infrared and radar signatures that trigger realistic system responses under controlled conditions.

Multi-Spectral Imaging Sensor Integration

Multi-spectral imaging sensor integration extends detection capabilities by capturing 5 to 15 or more spectral bands across the electromagnetic spectrum, far exceeding the three channels of conventional RGB systems.

You’ll achieve spectral coverage spanning 400-700 nm through chip-scale CMOS sensors with integrated Fabry-Pérot interference filters, eliminating bulky external optics while maintaining color calibration precision.

Three integration approaches optimize your deployment:

- Single-sensor mosaic patterns deliver compact form factors adaptable to mobile platforms without additional optical elements.

- Filter wheel systems offer a cost-effective option for stationary applications requiring detailed material analysis. Because these mechanical systems capture each spectral band sequentially, they are bulkier than single-sensor designs and cannot record all bands at the same instant.

- Camera arrays enable simultaneous multi-band capture, fusing wavelength-specific data for real-time target discrimination.

Each object reflects, emits, or absorbs specific wavelengths, producing a spectral signature that enables material differentiation beyond surface-visible features. These configurations turn subtle spectral signatures into actionable intelligence, revealing composition details and hidden patterns that conventional imaging can’t detect, and they support independent monitoring without proprietary system constraints.
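
One standard way to compare a measured spectrum against known material signatures is the spectral angle mapper (SAM), which treats each spectrum as a vector and measures the angle between them, so uniform brightness changes such as shading do not affect the match. The sketch below assumes a small, made-up six-band reflectance library; it illustrates the matching idea rather than any specific system's processing chain.

```python
import numpy as np


def spectral_angle(pixel: np.ndarray, reference: np.ndarray) -> float:
    """Angle (radians) between two spectra; insensitive to overall brightness scaling."""
    cos = np.dot(pixel, reference) / (np.linalg.norm(pixel) * np.linalg.norm(reference))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def classify_spectrum(pixel: np.ndarray, library: dict) -> tuple:
    """Return the library entry with the smallest spectral angle, plus all angles."""
    angles = {name: spectral_angle(pixel, ref) for name, ref in library.items()}
    best = min(angles, key=angles.get)
    return best, angles


# Hypothetical 6-band reflectance spectra, made up for illustration only.
LIBRARY = {
    "vegetation":    np.array([0.05, 0.08, 0.06, 0.45, 0.50, 0.48]),
    "painted_metal": np.array([0.30, 0.31, 0.32, 0.33, 0.33, 0.34]),
    "bare_soil":     np.array([0.12, 0.16, 0.20, 0.25, 0.28, 0.30]),
}

if __name__ == "__main__":
    # A dimly lit vegetation pixel: same spectral shape, 40% of the brightness, slight noise.
    rng = np.random.default_rng(1)
    observed = 0.4 * LIBRARY["vegetation"] + rng.normal(0.0, 0.002, size=6)
    label, angles = classify_spectrum(observed, LIBRARY)
    print(label, {k: round(v, 3) for k, v in angles.items()})
```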

Infrared Detection Range Improvements

Advanced infrared sensor technologies extend detection ranges through quantum materials that fundamentally alter photodetector performance parameters. Quantum dot infrared photodetectors leverage quantum coherence effects to reduce dark current while maintaining high carrier mobility, delivering measurable sensitivity improvements across mid-wave and long-wave infrared spectrums.

You’ll find that graphene and transition metal dichalcogenides provide superior detection performance compared to conventional materials, with reduced cooling requirements that minimize system complexity. Radiation-hardened detector arrays enable space-based platforms to track hypersonic threats across extended ranges without radiation-induced performance degradation.

Multi-megapixel focal plane arrays utilizing the GeoSnap architecture suppress motion artifacts through snapshot readout modes, in which all pixels integrate over the same time window, supporting precision tracking. Photon detectors generate electrical signals when incident photons interact with charge carriers, enabling precise differentiation of thermal signatures from small targets at extended ranges. Some systems now reach infrared resolutions of up to 1200 × 600 pixels for enhanced small target discrimination. These quantum material advancements expand your operational envelope for small target identification while reducing the logistical constraints inherent in traditional cooled detector systems.
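
Why both mid-wave and long-wave infrared matter follows from Wien's displacement law, which gives the wavelength at which a blackbody emits most strongly as b / T, with b ≈ 2898 µm·K. The sketch below applies it to two hypothetical temperatures; real targets are not ideal blackbodies, atmospheric transmission also shapes band choice, and the band edges in the code are approximate conventions rather than fixed standards.

```python
# Wien's displacement law: peak blackbody wavelength = b / T.
WIEN_B_UM_K = 2897.771955  # Wien displacement constant in micrometre-kelvin


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (µm) at which an ideal blackbody at this temperature emits most strongly."""
    return WIEN_B_UM_K / temperature_k


def ir_band(wavelength_um: float) -> str:
    """Map a wavelength to a conventional IR band.

    Band edges are approximate and vary between sources; these are assumptions.
    """
    if 1.0 <= wavelength_um < 3.0:
        return "SWIR"
    if 3.0 <= wavelength_um <= 5.0:
        return "MWIR"
    if 8.0 <= wavelength_um <= 14.0:
        return "LWIR"
    return "outside the SWIR/MWIR/LWIR windows used here"


if __name__ == "__main__":
    # Hypothetical example temperatures.
    for label, temp_k in [("ambient-temperature body", 300), ("hot engine exhaust", 800)]:
        peak = peak_wavelength_um(temp_k)
        print(f"{label} at {temp_k} K peaks near {peak:.2f} um ({ir_band(peak)})")
```

An ambient-temperature body (300 K) peaks near 9.7 µm in the LWIR, while an 800 K exhaust plume peaks near 3.6 µm in the MWIR, which is one reason hot-signature tracking and ambient-temperature detection favor different detectors.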

High-Definition Aperture Technology Advances

Computational phase synchronization transforms optical synthetic aperture systems from laboratory demonstrations into deployable sensor platforms by eliminating rigid interferometric alignment constraints.

You’ll achieve sub-micron resolution through software-based phase coherence rather than mechanical precision, giving you unprecedented freedom to configure multi-sensor arrays without bulky interferometric frameworks.

Key Performance Capabilities:

- Aperture calibration through iterative algorithms maximizes coherent energy across distributed sensors, replacing hardware-dependent synchronization.

- Complex wavefield recovery and numerical propagation enable virtual apertures exceeding any single sensor’s physical dimensions.

- Digital phase offset adjustment decouples measurement from physical constraints, allowing flexible deployment configurations.
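
The calibration idea in this list can be sketched as coordinate ascent on coherent energy. Assuming each sensor has already recovered a complex wavefield of the same scene that differs from the others only by an unknown constant phase (piston) offset, the sketch repeatedly sets each sensor's correction so that its field lines up with the sum of all the others, a step that can never decrease the energy of the combined field. The simulated wavefront and offsets are hypothetical; real systems must also handle tip/tilt, registration, and noise.

```python
import numpy as np


def coherent_energy(fields: np.ndarray, corrections: np.ndarray) -> float:
    """Energy of the coherent sum after removing per-sensor phase offsets.

    fields: complex array of shape (n_sensors, n_samples), one recovered wavefield per sensor.
    corrections: per-sensor phase corrections in radians, shape (n_sensors,).
    """
    combined = np.sum(fields * np.exp(-1j * corrections)[:, None], axis=0)
    return float(np.sum(np.abs(combined) ** 2))


def calibrate_phases(fields: np.ndarray, iterations: int = 20) -> np.ndarray:
    """Coordinate ascent: each sensor's correction aligns it with the sum of the others."""
    n_sensors = fields.shape[0]
    corrections = np.zeros(n_sensors)
    for _ in range(iterations):
        for k in range(n_sensors):
            corrected = fields * np.exp(-1j * corrections)[:, None]
            others = corrected.sum(axis=0) - corrected[k]
            # np.vdot conjugates its first argument: z = sum(conj(others) * fields[k]).
            # corrections[k] = angle(z) maximizes Re(exp(-1j * c) * z), i.e. the combined energy.
            corrections[k] = np.angle(np.vdot(others, fields[k]))
    # A phase shared by all sensors is unobservable, so reference everything to sensor 0.
    return np.angle(np.exp(1j * (corrections - corrections[0])))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    wavefront = rng.normal(size=64) + 1j * rng.normal(size=64)  # hypothetical common scene field
    true_offsets = rng.uniform(-np.pi, np.pi, size=6)           # hypothetical piston errors
    fields = wavefront[None, :] * np.exp(1j * true_offsets)[:, None]
    before = coherent_energy(fields, np.zeros(6))
    after = coherent_energy(fields, calibrate_phases(fields))
    ideal = 36 * np.sum(np.abs(wavefront) ** 2)
    print(f"coherent energy before: {before:.1f}, after: {after:.1f}, ideal: {ideal:.1f}")
```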

The system records raw diffraction patterns at each sensor location and recovers from them the complex wavefield, both amplitude and phase, whereas a traditional lens-based image preserves only intensity.

Combined with MEMS-based variable aperture technology in circular aperture configurations, you’ll avoid the hexagonal distortions produced by bladed mechanical irises while maintaining precise exposure control across challenging operational environments.

Advanced backside-illuminated sensor architectures further enhance signal capture by optimizing light absorption pathways, reducing noise floor levels critical for detecting weak signatures from small distant targets.

Machine Learning Algorithms for Pattern Recognition of Minimal Signatures

When you’re detecting small targets with minimal signatures, you’ll need feature extraction methods that maximize information from limited pixel data while maintaining computational efficiency.

Your classification pipeline must handle low-contrast scenarios through dimensionality reduction techniques—such as truncated signature transforms yielding 57-dimensional feature sets from order-2 expansions—that preserve discriminative power despite sparse input signals.

You can achieve 75%+ accuracy by combining texture-based features (GLCM), frequency-domain transforms (FFT), and appearance descriptors (LDA) into fusion architectures that outperform single-modality approaches on constrained signature datasets.
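
A minimal sketch of the texture-plus-frequency part of such a fusion, assuming small grayscale patches: a gray-level co-occurrence matrix (GLCM) computed for horizontal neighbors supplies contrast, energy, and homogeneity, and the 2-D FFT supplies the share of spectral power in concentric frequency rings. The quantization level, neighbor offset, and ring count are illustrative choices, and the appearance-descriptor branch is omitted.

```python
import numpy as np


def glcm_features(patch: np.ndarray, levels: int = 8) -> np.ndarray:
    """Contrast, energy and homogeneity from a symmetric, horizontal-neighbor GLCM."""
    p = patch.astype(float)
    lo, hi = p.min(), p.max()
    if hi == lo:
        q = np.zeros(p.shape, dtype=int)
    else:
        q = np.minimum(((p - lo) / (hi - lo) * levels).astype(int), levels - 1)
    glcm = np.zeros((levels, levels))
    # Count co-occurring gray levels for each pixel and its right-hand neighbor.
    np.add.at(glcm, (q[:, :-1].ravel(), q[:, 1:].ravel()), 1)
    glcm = glcm + glcm.T
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    contrast = np.sum(glcm * (i - j) ** 2)
    energy = np.sum(glcm ** 2)
    homogeneity = np.sum(glcm / (1.0 + np.abs(i - j)))
    return np.array([contrast, energy, homogeneity])


def fft_features(patch: np.ndarray, n_rings: int = 4) -> np.ndarray:
    """Fraction of spectral power in concentric frequency rings (mean removed first)."""
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(patch - patch.mean()))) ** 2
    h, w = spectrum.shape
    y, x = np.indices(spectrum.shape)
    radius = np.hypot(y - h // 2, x - w // 2)
    edges = np.linspace(0.0, radius.max() + 1e-9, n_rings + 1)
    total = spectrum.sum() + 1e-12
    return np.array([spectrum[(radius >= a) & (radius < b)].sum() / total
                     for a, b in zip(edges[:-1], edges[1:])])


def fused_features(patch: np.ndarray) -> np.ndarray:
    """Concatenate texture and frequency descriptors into one vector for a downstream classifier."""
    return np.concatenate([glcm_features(patch), fft_features(patch)])
```

The 7-element output of `fused_features` would feed a conventional classifier; in practice each feature would also be standardized before fusion.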

Small Signature Feature Extraction

Detecting minimal signatures in noisy environments demands feature extraction algorithms that maintain discrimination power despite reduced signal amplitudes and limited spatial extent.

You’ll need specialized methods that preserve temporal ordering while managing signature complexity inherent in reduced-scale patterns.

Critical extraction approaches for small signatures:

- Truncated path signatures generate feature vectors of dimension \( \frac{d^{N+1}-1}{d-1} \) for a \( d \)-channel path, where you’ll select truncation level \( N \) based on available signal resolution.

- Landmark-based extrema matching identifies curvature points through pen stroke analysis, isolating representative characteristics even in compressed spatial domains.

- Grid-based spatial coding extracts binary features by analyzing pixel distributions along axes, maintaining invariance despite scaling constraints.
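
The truncated-signature dimension, (d^(N+1) - 1)/(d - 1) for a d-channel path truncated at level N, is easy to check numerically. For d = 7 and N = 2 it gives 1 + 7 + 49 = 57 terms (counting the constant level-0 term), matching the 57-dimensional feature set mentioned earlier; the source does not state the channel count, so d = 7 is an inference. The sketch computes the level-2 signature of a piecewise-linear path directly with Chen's identity rather than through a signature library.

```python
import numpy as np


def signature_dim(d: int, n: int) -> int:
    """Length of a signature truncated at level n (levels 0..n) for a d-channel path."""
    return n + 1 if d == 1 else (d ** (n + 1) - 1) // (d - 1)


def signature_level2(path: np.ndarray) -> np.ndarray:
    """Signature truncated at level 2 for a piecewise-linear path of shape (T, d).

    Chen's identity: appending a straight segment with increment `delta` updates
    level 2 by outer(level1_so_far, delta) + outer(delta, delta) / 2.
    """
    d = path.shape[1]
    level1 = np.zeros(d)
    level2 = np.zeros((d, d))
    for delta in np.diff(path, axis=0):
        level2 += np.outer(level1, delta) + 0.5 * np.outer(delta, delta)
        level1 += delta
    return np.concatenate([[1.0], level1, level2.ravel()])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    path = rng.normal(size=(20, 7)).cumsum(axis=0)  # hypothetical 7-channel trajectory
    sig = signature_level2(path)
    print(signature_dim(7, 2), sig.shape)
```

A useful sanity check is the shuffle identity: the symmetric part of level 2 always equals half the outer product of level 1 with itself, so only the antisymmetric (signed-area) part carries new information about the path's ordering.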

Principal Component Analysis transforms these extracted features into uncorrelated components, reducing dimensionality while preserving discrimination power essential for classification algorithms.
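
A compact way to do this is PCA through the singular value decomposition of the mean-centered feature matrix, sketched below with NumPy. The synthetic 57-column data and the choice of 10 components are hypothetical; in practice the component count would be chosen from the explained-variance ratio on training data, and the same mean and axes would be reused at inference time.

```python
import numpy as np


def pca_fit_transform(features: np.ndarray, n_components: int):
    """Project mean-centered features onto their leading principal axes.

    Returns (projected, components, explained_variance_ratio).
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    projected = centered @ components.T
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return projected, components, explained[:n_components]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-in for 200 feature vectors of length 57 with correlated columns.
    X = rng.normal(size=(200, 5)) @ rng.normal(size=(5, 57)) + 0.01 * rng.normal(size=(200, 57))
    Z, axes, ratio = pca_fit_transform(X, n_components=10)
    print(Z.shape, f"variance kept by 10 components: {ratio.sum():.3f}")
```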

Low-Contrast Target Classification

How do machine learning algorithms maintain classification accuracy when target signatures occupy minimal pixel space and exhibit contrast levels barely exceeding background noise?

You’ll find WideResNet-28-2 architectures process low-contrast patches through strategic preprocessing workflows that preserve subtle intensity variations.

Three CNN approaches (RD, FT, and STN) demonstrate robust performance when evaluated with contrast scaled to 50%, 75%, 125%, and 150% of the original, maintaining AUC above 0.998 even under degraded conditions.

Your system’s sensor calibration directly influences feature extraction quality, particularly when targets occupy fewer than 32×32 pixels.

Wavelet scattering features combined with SVM quadratic kernels achieve 100% cross-validation accuracy on synthetic data augmented through controlled contrast manipulation.

These networks reject non-target detections while preserving sensitivity to authentic signatures, quantified through confusion matrix analysis across 11 distinct classes.
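
The contrast manipulation and AUC metric described in this section can be sketched as follows. Contrast is scaled about the patch mean by the factors listed above (50%, 75%, 125%, 150%), and AUC is computed with the Mann-Whitney rank statistic. The patch is synthetic, and the CNN and wavelet-scattering classifiers themselves are not shown.

```python
import numpy as np


def scale_contrast(patch: np.ndarray, factor: float) -> np.ndarray:
    """Scale deviations from the patch mean by `factor` (0.5 halves contrast, 1.5 boosts it 50%).

    A real pipeline would also clip the result to the sensor's valid intensity range.
    """
    mean = patch.mean()
    return mean + factor * (patch - mean)


def auc(scores_pos, scores_neg) -> float:
    """Area under the ROC curve via the Mann-Whitney statistic (ties count as half)."""
    pos = np.asarray(scores_pos, dtype=float)[:, None]
    neg = np.asarray(scores_neg, dtype=float)[None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))


CONTRAST_LEVELS = (0.50, 0.75, 1.25, 1.50)  # the levels listed in the text

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patch = rng.normal(100.0, 5.0, size=(32, 32))  # synthetic low-contrast patch
    augmented = {f: scale_contrast(patch, f) for f in CONTRAST_LEVELS}
    print({f: round(float(a.std() / patch.std()), 2) for f, a in augmented.items()})
    print(auc([0.9, 0.8, 0.7], [0.2, 0.75, 0.1]))
```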

Multi-Spectral Imaging Solutions for Low-Contrast Environments

Although traditional RGB sensors excel in well-lit conditions, they fail catastrophically when visible light diminishes below operational thresholds in night and fog scenarios.

You’ll need multispectral imaging solutions that liberate your detection capabilities from environmental constraints. Spectral calibration and atmospheric correction enable precise fusion of visible and infrared data streams, creating robust target representations regardless of lighting conditions.

A three-stage fusion strategy delivers this robustness:

- Cross-modal feature enhancement filters and amplifies complementary information from each spectral band.

- Mask Enhanced Pixel-level Fusion (MEPF) highlights small target semantics through intelligent multimodal integration.

- Cyclic overlay processing systematically eliminates sensor noise and spatial distortions.

This end-to-end architecture maintains linear computational complexity while achieving state-of-the-art detection performance in extreme environments where conventional systems become ineffective.
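
The three-stage pipeline above belongs to the cited work, but the core idea of pixel-level fusion guided by a target mask can be illustrated with a much simpler rule. The sketch below is a generic, hypothetical mask-weighted blend of co-registered visible and infrared frames, not the MEPF method: inside a candidate-target mask it keeps the stronger normalized response, and elsewhere it takes a weighted average.

```python
import numpy as np


def normalize(img: np.ndarray) -> np.ndarray:
    """Rescale an image to [0, 1]; a constant image maps to zeros."""
    img = img.astype(float)
    lo, hi = img.min(), img.max()
    return np.zeros_like(img) if hi == lo else (img - lo) / (hi - lo)


def mask_guided_fusion(visible: np.ndarray, infrared: np.ndarray, mask: np.ndarray,
                       ir_weight: float = 0.5) -> np.ndarray:
    """Blend co-registered visible and IR frames, favoring the stronger response inside a mask.

    Inside the mask (values near 1) each pixel takes the larger normalized response of the
    two bands; outside it, the bands are averaged with weight `ir_weight` on infrared.
    """
    v, r = normalize(visible), normalize(infrared)
    background = (1.0 - ir_weight) * v + ir_weight * r
    salient = np.maximum(v, r)
    m = np.clip(mask.astype(float), 0.0, 1.0)
    return m * salient + (1.0 - m) * background
```

In practice the mask would come from a coarse first-pass detector, and a soft mask (values between 0 and 1) avoids hard seams at target boundaries.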

Automation Systems Reducing Target Acquisition Time

Spectral fusion architectures provide the detection foundation, but operational effectiveness depends on minimizing the temporal gap between sensor contact and engagement authorization. You’ll achieve order-of-magnitude reductions in target acquisition time through automated preprocessing and parallel feature extraction methodologies.

Sensor calibration ensures consistent performance across deployments, eliminating operator-dependent variability that introduces delays. Your system processes candidate regions through standardized algorithms while maintaining data anonymization protocols for operational security.

Aided/automatic target recognition transmits only relevant intelligence, overcoming bandwidth constraints that plague remote platforms. You’re no longer bound by sequential processing workflows—concurrent execution of diagnostics, analysis, and validation occurs within single operational cycles.

Real-time feedback loops enable proactive engagement decisions rather than reactive responses. This temporal advantage transforms wide-area search capabilities, providing actionable alerts across expanded fields of regard without human-induced latency.
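
Parallel feature extraction over candidate regions, followed by sending only the candidates worth reporting, can be sketched with the thread pool from Python's standard `concurrent.futures` module. The per-chip statistics and the transmission threshold are hypothetical stand-ins for real extractors and decision logic; threads help mainly when the extraction code releases the GIL, as many NumPy operations do.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def chip_features(chip: np.ndarray) -> dict:
    """Cheap, hypothetical per-chip statistics standing in for real feature extractors."""
    mean = float(chip.mean())
    return {
        "mean": mean,
        "std": float(chip.std()),
        "peak_to_mean": float(chip.max() / (mean + 1e-9)),
    }


def extract_parallel(chips, max_workers: int = 4) -> list:
    """Run feature extraction on all candidate chips concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(chip_features, chips))


def select_for_transmission(features: list, min_peak_to_mean: float = 3.0) -> list:
    """Indices of candidates worth sending downstream (hypothetical threshold)."""
    return [i for i, f in enumerate(features) if f["peak_to_mean"] >= min_peak_to_mean]


if __name__ == "__main__":
    flat = np.ones((8, 8))
    hotspot = np.ones((8, 8))
    hotspot[4, 4] = 100.0
    feats = extract_parallel([flat, hotspot, flat])
    print(select_for_transmission(feats))  # -> [1], only the chip with a strong local peak
```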

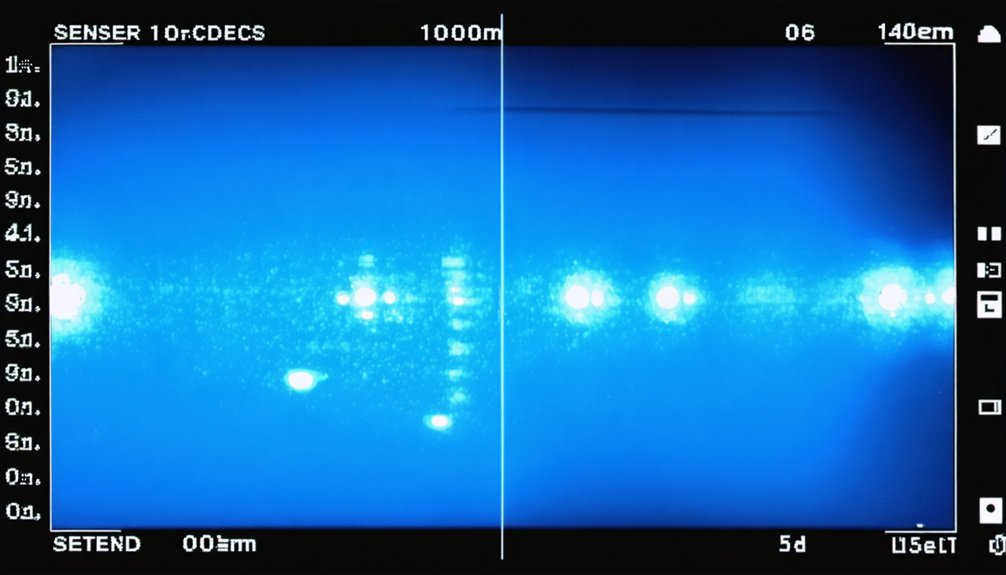

Hardware-in-the-Loop Testing for Small Object Recognition

When detection algorithms shift from simulation to operational hardware, you’ll verify performance through hardware-in-the-loop architectures that expose timing constraints and signal-level anomalies invisible in pure software environments.

Your embedded controllers process simulated sensor feeds in real-time, revealing CAN bus latency and ADC sampling jitter that degrades small target recognition. Real-time solvers operating at microsecond-level accuracy ensure deterministic execution across detection pipelines.

Critical validation elements include:

- AI diagnostics integration – Monitor neural network inference timing and accuracy degradation under actual hardware constraints

- Power management profiling – Measure detection algorithm performance during voltage fluctuations and thermal throttling conditions

- Signal fidelity testing – Verify small object detection sensitivity against electrical noise and timing variations from production components

This pre-commissioning approach catches hardware-dependent faults before fielding, reducing costly field failures while keeping development cycles independent of live trials.
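
A first step in monitoring inference timing is a latency profile that reports median, tail, and worst-case call times against a deadline. The sketch below uses only the Python standard library and times a stand-in workload on the host; on a hardware-in-the-loop rig the same measurement would be taken on the target controller with real sensor feeds. The 99th percentile here is a simple nearest-rank estimate.

```python
import statistics
import time
from typing import Any, Callable


def profile_latency(fn: Callable[[Any], Any], arg: Any, runs: int = 200,
                    deadline_ms: float = 10.0) -> dict:
    """Time repeated calls of `fn(arg)` and summarize latency against a deadline."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(arg)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p99_index = min(len(samples) - 1, int(0.99 * len(samples)))
    return {
        "p50_ms": statistics.median(samples),
        "p99_ms": samples[p99_index],
        "max_ms": samples[-1],
        "jitter_ms": samples[-1] - samples[0],  # spread between fastest and slowest call
        "deadline_misses": sum(s > deadline_ms for s in samples),
    }


if __name__ == "__main__":
    # Stand-in workload; on a HIL rig this would be the detector running on the target hardware.
    report = profile_latency(lambda n: sum(i * i for i in range(n)), 10_000,
                             runs=100, deadline_ms=5.0)
    print({k: round(v, 3) for k, v in report.items()})
```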

Real-Time Processing Requirements for Mobile Target Engagement

How rapidly must your processing pipeline respond when mobile platforms track dynamic targets at engagement velocities? You’ll need sub-100ms latency budgets to maintain tracking locks on small, fast-moving objects. Edge analytics architectures process sensor data locally, eliminating round-trip delays to centralized servers that compromise engagement windows.

Your system must handle 30-60 fps input streams while executing detection algorithms, classification models, and trajectory predictions simultaneously.
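
The frame rate and latency budget interact in a simple way: at 30 fps a new frame arrives every 33.3 ms and at 60 fps every 16.7 ms, so a 100 ms end-to-end budget spans about 3 frames at 30 fps and 6 at 60 fps. The sketch below checks hypothetical per-stage timings against both constraints, assuming the stages run pipelined on separate resources.

```python
def frame_period_ms(fps: float) -> float:
    """Time between frames at a given frame rate."""
    return 1000.0 / fps


def check_budget(stage_ms: dict, fps: float, latency_budget_ms: float = 100.0) -> dict:
    """Compare hypothetical per-stage latencies with a latency budget and the input frame rate.

    Assumes the stages are pipelined on separate resources, so throughput is set by the
    slowest stage while end-to-end latency is the sum of all stages.
    """
    total = sum(stage_ms.values())
    slowest = max(stage_ms.values())
    period = frame_period_ms(fps)
    return {
        "end_to_end_ms": total,
        "within_latency_budget": total <= latency_budget_ms,
        "keeps_up_with_stream": slowest <= period,
        "frames_in_flight": total / period,
    }


if __name__ == "__main__":
    # Illustrative stage timings, not measurements.
    stages = {"detect": 12.0, "classify": 8.0, "predict_trajectory": 5.0, "transmit": 20.0}
    for fps in (30, 60):
        print(fps, check_budget(stages, fps))
```

With these illustrative numbers, the 45 ms pipeline fits the 100 ms budget at either rate, but the 20 ms transmit stage is slower than the 16.7 ms frame period at 60 fps, so frames would queue there unless that stage is parallelized or its output compressed.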

Mobile bandwidth constraints demand intelligent data compression—transmit only actionable intelligence, not raw telemetry. Implement microservices that distribute computational loads across available processing nodes, ensuring no single bottleneck degrades performance.

Define hard real-time requirements during your technical analysis phase: what latency thresholds enable successful intercepts? Deploy progressive filtering stages that reject false positives early, preserving computational resources for genuine threats requiring immediate decision-making authority.
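
Progressive filtering can be expressed as a cascade in which cheap tests run on every candidate and expensive ones run only on survivors. The sketch below uses hypothetical stage costs and acceptance rules to show how the total processing cost depends on how early false positives are rejected.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    cost_ms: float                  # hypothetical per-candidate processing cost
    accept: Callable[[dict], bool]  # returns True if the candidate survives this stage


def run_cascade(candidates: List[dict], stages: List[Stage]) -> Tuple[List[dict], float]:
    """Apply stages in order, cheapest first; rejected candidates never reach later stages."""
    survivors = list(candidates)
    spent_ms = 0.0
    for stage in stages:
        spent_ms += stage.cost_ms * len(survivors)
        survivors = [c for c in survivors if stage.accept(c)]
    return survivors, spent_ms


if __name__ == "__main__":
    candidates = [{"snr": i % 10, "score": 0.9 if i % 20 == 9 else 0.1} for i in range(100)]
    stages = [
        Stage("snr_gate", 0.5, lambda c: c["snr"] >= 9),
        Stage("classifier", 5.0, lambda c: c["score"] >= 0.5),
    ]
    kept, cost = run_cascade(candidates, stages)
    print(len(kept), cost)  # 5 candidates survive; 100.0 ms spent vs 550.0 ms without early rejection
```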

Frequently Asked Questions

What Minimum Target Size Can Current Systems Reliably Detect at Maximum Range?

You’d think maximum range means maximum capability, but it doesn’t. Current systems reliably detect 1 m² targets at 70-100 km, requiring precise sensor calibration and target resolution. Smaller objects demand you sacrifice range for detection probability.
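
The range penalty for smaller targets follows from the radar range equation: if every other term is held fixed, maximum detection range scales with the fourth root of radar cross-section (RCS). Assuming the 1 m² figure above is an RCS and the 70-100 km range scales this way, the sketch below estimates ranges for smaller targets.

```python
def scaled_range_km(ref_range_km: float, ref_rcs_m2: float, rcs_m2: float) -> float:
    """Radar range equation with all other terms fixed: maximum range scales as RCS ** (1/4)."""
    return ref_range_km * (rcs_m2 / ref_rcs_m2) ** 0.25


if __name__ == "__main__":
    for rcs in (1.0, 0.1, 0.01):
        near = scaled_range_km(70.0, 1.0, rcs)
        far = scaled_range_km(100.0, 1.0, rcs)
        print(f"RCS {rcs:>5} m^2 -> roughly {near:.0f}-{far:.0f} km")
```

Under that assumption, a 0.1 m² target would be detected at roughly 39-56 km and a 0.01 m² target at roughly 22-32 km: a hundredfold smaller cross-section cuts range by a factor of about 3.2, the fourth root of 100.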

How Does Weather Affect Small Target Detection Accuracy in Combat Scenarios?

Weather variability degrades your detection accuracy by reducing signal-to-clutter ratios through atmospheric interference. You’ll need adaptive sensor calibration to maintain performance, as rain, fog, and thermal gradients can decrease small target recognition rates by 40-60% in combat scenarios.

What Is the Cost Difference Between Basic and Advanced Detection Systems?

You’ll find basic detection systems cost $100-$500 upfront with minimal maintenance, while advanced systems require $1,500+ initially plus 15-20% annual upkeep. This cost comparison directly impacts your system scalability choices and operational independence.

Can Existing Platforms Be Upgraded or Must New Hardware Be Purchased?

You can upgrade existing platforms through sensor calibration adjustments and signal processing optimization without purchasing new hardware. Software parameter modifications, algorithm enhancements, and operational reconfiguration deliver measurable sensitivity improvements—typically 3-7x gains—while preserving your current system investment.

How Do Allied and Adversary Detection Capabilities Currently Compare?

You’ll find Allied systems lead in AI-enhanced sensor fusion with 60% better accuracy, while adversaries counter through decoy strategies and signal jamming. Quantum sensing promises 100x sensitivity gains, though hypersonic speeds and swarm coordination challenge your detection networks.
