You’ll identify titanium most efficiently by running three rapid tests. First, confirm there is zero attraction to a neodymium magnet, since titanium is only weakly paramagnetic (χ ≈ +1.25×10⁻⁴). Second, measure density by water displacement and check that it falls between 4.43 and 4.51 g/cm³. Third, perform a spark test and look for distinctive bright white sparks with starburst patterns. For field verification, handheld XRF analyzers deliver 99.5+% accuracy in 2–3 seconds, while laboratory ICP-OES quantifies alloying elements at parts-per-million levels. The methodology below covers advanced techniques and the environmental factors that affect measurement reliability.

Key Takeaways

- Titanium shows no magnetic attraction to neodymium magnets, quickly eliminating ferrous metals from consideration during field identification.

- Density testing confirms titanium at about 4.5 g/cm³ (4.51 g/cm³ for commercially pure grades), roughly 40–45% lighter than steel, and can be measured by water displacement.

- Spark testing produces distinctive bright white sparks with starburst patterns, contrasting with steel’s orange sparks for visual verification.

- Handheld XRF analyzers identify titanium and alloying elements in 2–3 seconds with over 99.5% accuracy non-destructively.

- Surface preparation is critical: remove paint, oxides, and contaminants before testing to prevent false readings and ensure accurate results.

Quick Physical Tests You Can Perform Anywhere

How can you verify titanium’s authenticity without accessing a laboratory? You’ll find magnet testing provides your fastest screening method—titanium won’t attract to standard magnets, immediately ruling out ferrous imposters.

Visual inspection offers early clues, and titanium feels noticeably light for its size. You can make comparative weight assessments using titanium’s density of 4.5 g/cm³, which sits between aluminum (2.7 g/cm³) and steel (7.8 g/cm³).


For chemical screening, expose samples to dilute acids: titanium’s protective oxide layer resists the corrosion that degrades common metals. For a more definitive analysis, dissolve the sample in sulfuric, hydrochloric, or fluoboric acid, then treat the solution with hydrogen peroxide.

Spark testing offers workshop-level identification when you’ve got grinding equipment available. The spark pattern characteristics from grinding titanium produce bright white sparks with distinct bursts that differ markedly from steel’s longer, branching orange sparks. These field-deployable methods deliver immediate feedback without specialized instrumentation, though you’ll want confirmatory testing for conclusive results.

Combine multiple techniques to strengthen identification confidence before committing to material specifications.

Understanding Density and Weight Measurements

Three fundamental density values define titanium’s physical identity: commercially pure titanium measures 4.51 g/cm³, the widely used Ti-6Al-4V alloy measures 4.43 g/cm³, and specialized alloys range from 4.32 to 6.0 g/cm³ depending on their alloying elements.

Density calculations let you verify authenticity independently. Titanium is roughly 44% less dense than steel (8 g/cm³), about half as dense as copper (8.94 g/cm³), and well below brass (8.54 g/cm³). As temperature rises from 20°C to 882.5°C (the alpha-to-beta transformation temperature of pure titanium), expect density to fall from 4.51 to approximately 4.35 g/cm³ because of thermal expansion.

For weight estimation, 4.51 g/cm³ converts to 0.163 lb/in³, or about 281.6 lb/ft³.
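These conversions are plain unit arithmetic. A minimal Python sketch, using the exact definitions 1 lb = 453.59237 g and 1 in = 2.54 cm (the function names are illustrative, not from any library):

```python
GRAMS_PER_POUND = 453.59237  # exact, by definition of the avoirdupois pound
CM3_PER_IN3 = 2.54 ** 3      # 1 in = 2.54 cm exactly, so 16.387064 cm³ per in³
IN3_PER_FT3 = 12 ** 3        # 1728 cubic inches per cubic foot


def g_cm3_to_lb_in3(density_g_cm3: float) -> float:
    """Convert a density from g/cm³ to lb/in³."""
    return density_g_cm3 * CM3_PER_IN3 / GRAMS_PER_POUND


def g_cm3_to_lb_ft3(density_g_cm3: float) -> float:
    """Convert a density from g/cm³ to lb/ft³."""
    return g_cm3_to_lb_in3(density_g_cm3) * IN3_PER_FT3


if __name__ == "__main__":
    ti = 4.51  # commercially pure titanium, g/cm³
    print(f"{g_cm3_to_lb_in3(ti):.3f} lb/in³")  # 0.163 lb/in³
    print(f"{g_cm3_to_lb_ft3(ti):.1f} lb/ft³")  # 281.6 lb/ft³
```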

Three proven measurement methods:

- Water displacement – Submerge irregular pieces, measure volume change

- Direct calculation – Weigh regular shapes, measure dimensions, divide mass by volume

- Pycnometer testing – Determine displaced liquid mass for precise verification

These techniques need little more than an accurate scale and basic glassware, so you can run them without a laboratory.
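The water displacement method reduces to mass divided by the rise in water level, since 1 mL of displaced water equals 1 cm³. The sketch below pairs that calculation with a comparison against the reference densities quoted in this article. The helper names and the ±0.1 g/cm³ tolerance are assumptions; a graduated cylinder has limited resolution, so small samples may need a wider tolerance, and specialized alloys (4.32–6.0 g/cm³) are deliberately left out of the table.

```python
# Reference densities in g/cm³, taken from the values quoted in this article.
REFERENCE_RANGES = {
    "aluminum": (2.7, 2.7),
    "titanium (CP or Ti-6Al-4V)": (4.43, 4.51),
    "steel": (7.75, 8.05),
    "brass": (8.54, 8.54),
    "copper": (8.94, 8.94),
}


def density_from_displacement(mass_g: float, level_before_ml: float, level_after_ml: float) -> float:
    """Density from a graduated-cylinder reading; 1 mL of displaced water = 1 cm³."""
    displaced_cm3 = level_after_ml - level_before_ml
    if displaced_cm3 <= 0:
        raise ValueError("water level must rise when the sample is submerged")
    return mass_g / displaced_cm3


def candidate_metals(density: float, tolerance: float = 0.1) -> list[str]:
    """Metals whose reference range, widened by an assumed tolerance, contains the density."""
    return [
        name
        for name, (low, high) in REFERENCE_RANGES.items()
        if low - tolerance <= density <= high + tolerance
    ]


if __name__ == "__main__":
    rho = density_from_displacement(mass_g=45.1, level_before_ml=50.0, level_after_ml=60.0)
    print(f"{rho:.2f} g/cm³ -> {candidate_metals(rho)}")
```

An empty result means the measured density matches none of the listed metals, which is itself a useful signal to remeasure or move to instrumental testing.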

Magnetic Properties as a Screening Tool

You can exploit titanium’s paramagnetic properties (χ ≈ +1.25×10⁻⁴) to rapidly differentiate it from ferromagnetic metals during field identification.

When you apply a strong neodymium magnet to pure titanium, you’ll observe zero detectable attraction, immediately eliminating steel, iron, and nickel-based alloys from consideration.

This non-magnetic screening test gives a clear negative result within seconds, preventing misidentification of common structural metals that show strong ferromagnetic responses.

However, certain titanium alloys containing ferromagnetic elements like iron may display trace ferromagnetic behavior, though this response remains significantly weaker than that of pure ferromagnetic metals.

For precise verification, instruments such as vibrating sample magnetometers can measure titanium’s weak paramagnetic susceptibility and confirm material identity beyond what field tests can show.

Non-Magnetic Nature of Titanium

When differentiating titanium from other metals, its non-magnetic behavior provides a rapid, non-destructive screening method that eliminates ferrous materials from consideration.

Titanium’s weak paramagnetic behavior is consistent across most commercial grades, with relative magnetic permeability barely exceeding unity (1.00005–1.0001). This lets you verify material without specialized equipment: simply confirm there is zero attraction when a permanent magnet is applied.

Critical Screening Parameters:

- Alloy composition verification: Monitor for iron contamination exceeding 0.5%, which introduces weak ferromagnetic responses.

- Non-magnetic housings: Titanium’s near-unity permeability means it neither attracts nor distorts magnetic flux, making it suitable for sensitive instrumentation housings.

- Field retention testing: Confirm absence of residual magnetization post-exposure, distinguishing titanium from ferromagnetic alternatives.

Simple magnetic testing lets you screen materials independently before committing to laboratory analysis. Titanium lacks the ferromagnetic ordering of iron, nickel, and cobalt, so its interaction with magnetic fields is extremely weak, which is why it performs reliably in sensitive aerospace and medical applications. Compare titanium’s density of ~4.5 g/cm³ against steel’s 7.75–8.05 g/cm³ to further confirm identification through physical measurement.

Eliminating False Metal Identifications

While titanium’s non-magnetic behavior serves as a valuable identification marker, magnetic testing alone introduces significant error potential that compromises material verification accuracy. You’ll need systematic protocols combining multiple methods.

Start with magnet screening to eliminate ferromagnetic metals, then verify through density measurements and spark testing—titanium produces distinctive white-yellow streams with starburst patterns.
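The screening order in this protocol (magnet, then density, then spark) can be expressed as a short decision sketch. It is a hypothetical helper, not a standard procedure: the `FieldObservations` fields, the accepted spark labels, and the 4.32–6.0 g/cm³ window (the alloy density range quoted earlier in this article) are assumptions. A pass only means the sample merits confirmatory XRF or laboratory testing.

```python
from dataclasses import dataclass

# Spark descriptions this sketch treats as consistent with titanium (assumed labels).
TITANIUM_SPARKS = {"white", "white-yellow"}


@dataclass
class FieldObservations:
    strong_magnet_pull: bool  # clear attraction to a neodymium magnet
    density_g_cm3: float      # from water displacement or direct calculation
    spark_color: str          # observer's description, e.g. "white" or "orange"


def screen(obs: FieldObservations) -> str:
    """Apply the magnet, density, and spark checks in order; the first failure ends screening."""
    if obs.strong_magnet_pull:
        return "reject: ferromagnetic metal"
    if not 4.32 <= obs.density_g_cm3 <= 6.0:
        return "reject: density outside the titanium range"
    if obs.spark_color.strip().lower() not in TITANIUM_SPARKS:
        return "inconclusive: spark pattern does not match titanium"
    return "likely titanium: confirm with XRF or laboratory analysis"


if __name__ == "__main__":
    print(screen(FieldObservations(strong_magnet_pull=False, density_g_cm3=4.48, spark_color="white")))
```

The checks run cheapest first, so a steel part is rejected at the magnet without further work.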

Advanced techniques like X-ray Fluorescence provide definitive elemental composition without magnetic bias. Eddy Current Testing detects surface anomalies while Ultrasonic Testing reveals internal property variations.

For aerospace applications requiring the highest certainty, acoustic testing validates structural integrity, while radiographic inspection provides a density-dependent check on the material. At the research level, Quantum Monte Carlo simulations have enabled precise calculations of complex electron interactions in titanium oxide derivatives, showing how computational methods can resolve uncertainties in magnetic properties that affect material identification.

Don’t rely solely on magnetometer readings below 1×10⁻⁶ cgs units. Environmental factors such as temperature fluctuations can decrease susceptibility by about 2% per 10°C, so testing conditions must be controlled and thoroughly documented. Preclean samples to remove ferromagnetic contamination, such as iron filings deposited during machining, which can produce false positive magnetic responses.

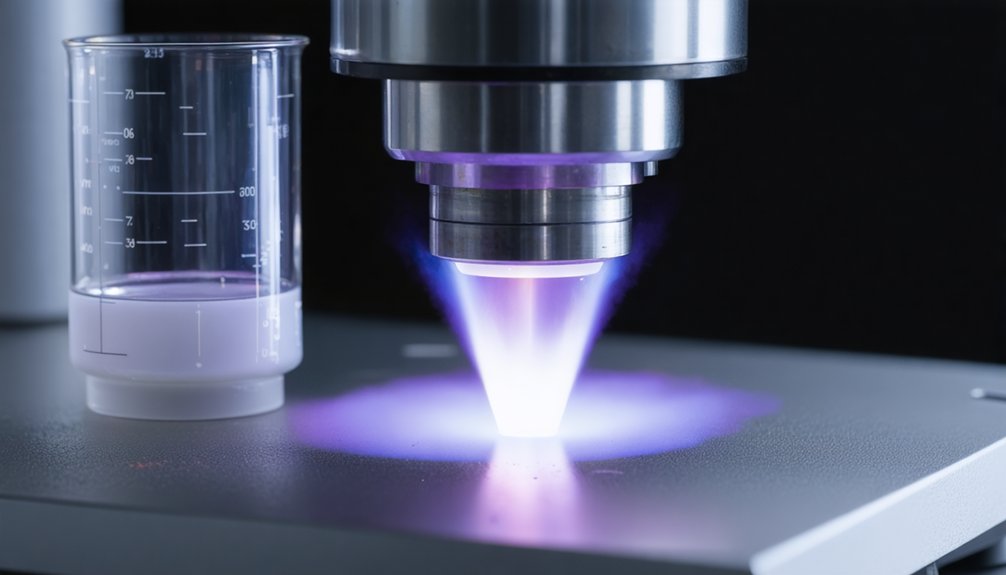

Handheld XRF Analyzers for Field Testing

Handheld XRF analyzers identify titanium in 2–3 seconds, with results correlating to laboratory measurements at R² values above 0.85.

You’ll verify material compliance against industry specifications through nondestructive elemental analysis that detects titanium alongside its common alloying elements from magnesium through uranium.

For the best detection accuracy, use an analyzer with Silicon Drift Detector (SDD) technology under controlled conditions. Titanium’s mid-range atomic number means its fluorescence is measured reliably in ordinary air, without the vacuum or helium environments that lighter elements can require.

Speed and Accuracy Benefits

How quickly can you verify alloy composition in the field while maintaining laboratory-grade accuracy? Modern handheld XRF technology delivers both speed and precision through advanced portable spectroscopy. You’ll achieve 2-second alloy tests with 99.5+% measurement accuracy, eliminating bottlenecks in your quality control workflow.

Key Performance Metrics:

- Elemental detection spans magnesium to uranium, and tramp elements in titanium are detected below 100 ppm for precise grade matching.

- Phosphorus and sulfur analysis runs up to 3x faster than on competing systems, with tests completing in 2–6 seconds.

- Sensitivity improvements of 5-50x for most elements enable confident field decisions without laboratory verification delays.

With routine calibration checks, a portable system keeps its test speed consistent and delivers near-laboratory results on-site.

Industry Compliance Verification Process

When titanium components enter your production workflow, you’ll need verifiable compliance documentation that matches regulatory requirements across aerospace, medical, and industrial sectors. Handheld XRF delivers non-destructive verification in 2-10 seconds, confirming ASTM B381 and ASME SB-381 specifications without compromising material integrity. You’ll achieve R² correlation exceeding 0.99 between measured and certified values, establishing traceability that NADCAP and ISO 9001 auditors demand.

Your verification protocol starts with surface preparation: grinding away corrosion and cleaning with solvent ensure accurate readings. XRF complements electrochemical testing and thermal conductivity measurements, building a more complete quality assurance program.

Document every scan in production testing plans that specify sampling frequency, acceptable limits, and corrective actions. This systematic approach recovers lost traceability, screens for RoHS-restricted substances, and validates Ti-Zr conversion coatings, supporting confident quality decisions.
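Checking each scan against the acceptable limits in a testing plan is a simple range comparison. The sketch below is hypothetical: the function name, the reading format, and the Ti-6Al-4V limits are illustrative assumptions only, so take real limits from the specification your parts are ordered to.

```python
# Illustrative Ti-6Al-4V acceptance limits in wt% (assumed for this sketch,
# not quoted from any standard).
SPEC_LIMITS: dict[str, tuple[float, float]] = {
    "Al": (5.5, 6.75),
    "V": (3.5, 4.5),
    "Fe": (0.0, 0.40),
}


def check_against_spec(
    reading: dict[str, float],
    limits: dict[str, tuple[float, float]] = SPEC_LIMITS,
) -> dict[str, str]:
    """Return a problem description per element; an empty dict means the scan passes."""
    problems = {}
    for element, (low, high) in limits.items():
        value = reading.get(element)
        if value is None:
            problems[element] = "not reported by the scan"
        elif not low <= value <= high:
            problems[element] = f"{value} wt% outside {low}-{high} wt%"
    return problems


if __name__ == "__main__":
    scan = {"Ti": 89.0, "Al": 6.1, "V": 4.7, "Fe": 0.18}  # hypothetical XRF result
    print(check_against_spec(scan) or "pass")
```

Returning the failing elements, rather than a bare pass/fail, gives the record that the corrective-action step of the plan needs.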

Optimal Operating Conditions Required

Because titanium’s chemical signature responds predictably to specific X-ray energies, you’ll need tube voltages between 40 and 50 kilovolts to excite characteristic fluorescence from the Ti K-alpha line at 4.51 keV. Higher voltages improve detection limits, while standard 40–45 kV configurations balance sensitivity against equipment specifications.
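The 4.51 keV figure can be sanity-checked with Moseley’s law, a classic approximation (swapped in here for illustration, not the document’s method) that treats the K-alpha transition as hydrogen-like with one screening electron. The tube voltage must exceed titanium’s K absorption edge, which lies just under 5 keV, so 40 kV leaves a wide margin. The function name is hypothetical.

```python
RYDBERG_EV = 13.605693  # Rydberg energy in eV


def moseley_kalpha_kev(atomic_number: int) -> float:
    """Estimate the K-alpha energy with Moseley's law (screening constant 1).

    E ≈ Ry * (1/1² - 1/2²) * (Z - 1)² = (3/4) * Ry * (Z - 1)², converted to keV.
    """
    return 0.75 * RYDBERG_EV * (atomic_number - 1) ** 2 / 1000.0


if __name__ == "__main__":
    ti_kalpha = moseley_kalpha_kev(22)  # titanium, Z = 22
    print(f"Ti K-alpha estimate: {ti_kalpha:.2f} keV")  # 4.50 keV, vs 4.51 keV measured
```

The estimate lands within about 0.2% of the measured line, which is why Moseley’s relation remains a handy check on element assignments in XRF spectra.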

Surface preparation critically determines accuracy. Remove these contaminants before analysis:

- Paint, sealers, and galvanization layers – Block X-ray penetration to bulk material

- Residue from mechanical surface treatments – Sandblasting, shot blasting, or grinding debris skews composition data

- Dirt and foreign matter – Dramatically alters measured elemental ratios

Your analyzer measures less than 100 microns into ferrous materials, so surface cleanliness is non-negotiable.

Maintain sample temperatures below 100°C for standard detector operation.

Because XRF samples only this shallow surface layer, a clean surface is what makes the reading representative of bulk titanium composition.

Professional Laboratory Spectroscopy Techniques

While titanium’s exceptional properties make it invaluable across aerospace, medical, and industrial applications, accurate compositional analysis requires sophisticated spectroscopic methods that can detect both major alloying elements and trace impurities at parts-per-million levels.

Spark-AES following ASTM E2994-21 provides highly accurate impurity profiling across titanium alloys. For calibrations spanning 0.004–50.0% tin, X-ray fluorescence spectroscopy delivers reproducible intensity measurements using an X-ray tube operated at 60 kV and 50 mA with a helium-path goniometer.

When you need ultra-trace sensitivity, glow discharge mass spectrometry detects depth-specific elemental distributions while maintaining robust performance across diverse sample matrices.

Used together, these complementary techniques support purity certification and thorough compositional characterization.

Detecting Impurities With ICP Analysis

For thorough impurity profiling in titanium matrices, high-resolution ICP mass spectrometry (HR-ICP-MS) quantifies 26 elements in a single analysis, including Be, Na, Mg, Al, V, Cr, Mn, Fe, Co, Ni, Cu, Zn, and heavy metals.

The technique delivers detection limits ranging from 0.004 to 0.63 µg/g with recovery ratios between 87.6% and 106.4%, ensuring measurement accuracy that meets ISO/ASTM standards.
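Recovery ratios and detection limits like these come from two routine calculations: spike recovery, and a detection limit derived from the scatter of blank measurements. The sketch uses the common 3-sigma convention for the detection limit; the function names and all numbers are hypothetical, not data from the studies behind the figures quoted here.

```python
import statistics


def spike_recovery_percent(spiked: float, unspiked: float, added: float) -> float:
    """Percent of a known spike recovered: (spiked - unspiked) / added * 100."""
    return 100.0 * (spiked - unspiked) / added


def detection_limit(blank_signals: list[float], slope: float) -> float:
    """3-sigma detection limit: 3 x sample standard deviation of blanks / calibration slope."""
    return 3.0 * statistics.stdev(blank_signals) / slope


if __name__ == "__main__":
    # Hypothetical values: concentrations in µg/g, signals in counts, slope in counts per µg/g.
    print(f"recovery: {spike_recovery_percent(1.18, 0.20, 1.00):.1f} %")
    print(f"LOD: {detection_limit([10, 12, 11, 13, 9], slope=100.0):.3f} µg/g")
```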

Establish matrix-matched calibration standards and, in ICP-MS, use a collision-reaction cell with helium at 4 mL/min to eliminate polyatomic interferences, particularly ⁴⁰Ar¹⁶O⁺, which overlaps ⁵⁶Fe.
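A polyatomic interference occurs when a molecular ion has the same nominal mass as the analyte isotope, as ⁴⁰Ar¹⁶O⁺ (40 + 16 = 56) does for ⁵⁶Fe. The sketch flags such overlaps by summing mass numbers. The ion and analyte lists are a small illustrative selection, and the TiO⁺ entries are an addition showing how the titanium matrix itself interferes with copper and zinc. Nominal-mass matching only identifies candidates; resolving them requires high-resolution instruments or collision/reaction cells.

```python
# Nominal (integer) masses of a few analyte isotopes.
ANALYTES = {"52Cr": 52, "54Fe": 54, "56Fe": 56, "57Fe": 57, "63Cu": 63, "64Zn": 64}

# Polyatomic ions as tuples of constituent isotope mass numbers.
POLYATOMICS = {
    "40Ar12C+": (40, 12),
    "40Ar14N+": (40, 14),
    "40Ar16O+": (40, 16),
    "40Ar16O1H+": (40, 16, 1),
    "47Ti16O+": (47, 16),   # titanium-matrix ion
    "48Ti16O+": (48, 16),   # titanium-matrix ion
}


def nominal_overlaps() -> dict[str, list[str]]:
    """Map each analyte isotope to the polyatomic ions sharing its nominal mass."""
    hits: dict[str, list[str]] = {}
    for ion, parts in POLYATOMICS.items():
        ion_mass = sum(parts)
        for analyte, mass in ANALYTES.items():
            if ion_mass == mass:
                hits.setdefault(analyte, []).append(ion)
    return hits


if __name__ == "__main__":
    for analyte, ions in nominal_overlaps().items():
        print(f"{analyte}: {', '.join(ions)}")
```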

ICP-OES Multi-Element Detection

ICP-OES (Inductively Coupled Plasma-Optical Emission Spectrometry) uses an argon plasma at roughly 6,000–10,000 K to excite atoms in your titanium sample. The excited atoms emit light at element-specific wavelengths, enabling simultaneous multi-element quantification.

You’ll detect major alloying elements (Al, V) alongside critical impurities (Ni, Cr, Fe, Mg, Na) in a single analytical run.

Core analytical parameters you must optimize:

- Spectroscopic calibration requires matrix-matched standards containing Ti, Al, V, and Zr in acid solutions to compensate for spectral interferences from titanium’s complex emission profile.

- Sample digestion through microwave-assisted acid dissolution ensures complete matrix breakdown, achieving 97–110% recoveries with RSD values between 0.3–3.1%.

- Automated dilution systems manage high total dissolved solids, maintaining plasma stability while scandium internal standards correct for matrix effects and instrument drift.
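Internal-standard correction works by calibrating on the ratio of analyte signal to scandium signal, so drift that affects both lines cancels out. The sketch below fits a least-squares line to standards and reads an unknown off it. All signal values and function names are hypothetical; real methods also check linearity, blanks, and internal-standard recovery.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def ratio(analyte_counts: float, scandium_counts: float) -> float:
    """Analyte signal normalized to the scandium internal standard."""
    return analyte_counts / scandium_counts


if __name__ == "__main__":
    # Hypothetical matrix-matched standards: (concentration mg/L, analyte counts, Sc counts).
    # The Sc signal falls as the plasma drifts, but the ratio stays linear in concentration.
    standards = [(0.0, 100, 10000), (1.0, 1100, 10000), (2.0, 1890, 9000), (5.0, 4080, 8000)]
    conc = [c for c, _, _ in standards]
    ratios = [ratio(a, s) for _, a, s in standards]
    slope, intercept = fit_line(conc, ratios)

    unknown = ratio(2635, 8500)  # hypothetical sample reading
    print(f"estimated concentration: {(unknown - intercept) / slope:.2f} mg/L")
```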

Trace Impurity Sensitivity Levels

Beyond multi-element screening capabilities, ICP-based techniques achieve detection limits that span four orders of magnitude depending on your instrumental configuration and matrix complexity.

HR-ICP-MS delivers 0.004-0.63 μg/g sensitivity for 26 elements in high-purity titanium dioxide, while tandem ICP-MS reaches sub-ng/L (ppt) levels for titanium isotopes through MS/MS mode interference removal.

ICP-QQQ systems using NH₃ reaction chemistry go further, yielding background equivalent concentrations four times lower than helium collision mode for copper in a 500 ppm titanium matrix.

GDMS provides industry-leading detection of trace impurities in titanium alloys and supports ASTM certification protocols.

Your choice between techniques depends on required sensitivity thresholds: ICP-OES for routine screening, ICP-MS for ultra-trace quantification, and GDMS for all-encompassing purity certification.

Microscopic Examination Methods

Your preparation workflow determines analysis quality:

- Chemical etching: Apply 1% hydrofluoric acid to expose grain boundaries, or use a hydrogen peroxide and colloidal silica mixture (10 mL of 30% H₂O₂ with 50 mL colloidal silica) for attack polishing.

- SEM integration: Combine surface morphology imaging with energy-dispersive X-ray spectroscopy for compositional mapping.

- Ductility management: Employ specialized cut-off wheels and vibratory polishing to prevent twinning damage during specimen preparation.

Chemical Testing Procedures for Verification

Once you’ve characterized titanium’s microstructure through microscopic examination, quantitative chemical analysis verifies compositional compliance and confirms material identity.

Spark atomic emission spectroscopy delivers precise elemental analysis following GB/T 4698 and ASTM E2994-16 standards. Spectroscopic calibration ensures accurate detection of copper, iron, silicon, manganese, and molybdenum within specified ranges.

Inductively coupled plasma atomic emission spectrometry measures chromium (0.005–4.0%), vanadium (0.01–15.0%), and zirconium (0.003–4.0%) per ASTM E2371-21a. Samples are dissolved in fluoboric and sulfuric acids, and colorimetric tests on the dissolved titanium produce characteristic violet or yellow indicators.

Glow discharge mass spectrometry provides full periodic table coverage with minimal preparation, detecting ultra-trace impurities in Ti-6Al-4V.

Lead reduction followed by iodine titration quantifies tin (0.03–1.0%) independently, providing a cross-check so verification does not depend on a single method.
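After reduction, the titration arithmetic follows from the stoichiometry Sn²⁺ + I₂ → Sn⁴⁺ + 2I⁻, one mole of iodine per mole of tin. This sketch assumes a standardized iodine titrant and an optional blank correction; if the titrant is potassium iodate instead, the mole ratio changes. The function name and example values are hypothetical.

```python
SN_MOLAR_MASS = 118.71  # g/mol


def tin_weight_percent(titrant_ml: float, iodine_molarity: float,
                       sample_g: float, blank_ml: float = 0.0) -> float:
    """Tin content in wt% from an iodine titration of reduced Sn(II).

    Assumes Sn2+ + I2 -> Sn4+ + 2 I-, i.e. 1 mol I2 consumed per mol Sn.
    """
    moles_iodine = (titrant_ml - blank_ml) / 1000.0 * iodine_molarity
    tin_grams = moles_iodine * SN_MOLAR_MASS
    return tin_grams / sample_g * 100.0


if __name__ == "__main__":
    # Hypothetical run: 10.00 mL of 0.0500 M I2 for a 10.0 g titanium chip sample.
    print(f"Sn: {tin_weight_percent(10.00, 0.0500, 10.0):.3f} wt%")
```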

Comparing Corrosion Resistance Characteristics

While chemical testing confirms titanium’s composition, evaluating its corrosion resistance characteristics reveals why specific grades dominate particular industrial applications. Understanding corrosion mechanisms enables you to select suitable titanium grades without unnecessary protective coatings.


Key Resistance Indicators:

- Oxidizing Acid Performance – Titanium’s passive oxide film outperforms stainless steel in hot nitric acid. Commercially pure Grades 2 and 4 and the lightly alloyed Grades 7 and 12 show superior resistance to metal-contaminated media.

- Chloride Environment Limits – Crevice corrosion defines the application boundaries in chloride media, but TIMETAL Code-12 and 50A Pd variants extend operating limits well beyond those of unalloyed titanium.

- Alloy-Specific Variations – TC4 (Ti-6Al-4V) base metal outperforms its welds in sulfuric acid, while TA2 (commercially pure titanium) shows the opposite behavior. Critical applications therefore need verification testing.

This data-driven approach eliminates guesswork when specifying titanium components.

Choosing the Right Detection Method for Your Application

Beyond corrosion behavior analysis, detection method selection depends on three primary factors: required accuracy levels, available equipment infrastructure, and sample preparation constraints.

ICP-OES delivers 98.77% recovery with multi-element capability from a single preparation step, while ICP-MS reaches detection limits of 0.5–1.5 μg L⁻¹ in whole blood analysis.

Material compatibility dictates your digestion protocol: nitric-hydrofluoric acid mixtures dissolve titanium dioxide nanomaterials effectively.

X-ray fluorescence enables non-destructive analysis across 0.004-50.0% concentration ranges.

Element-specific spectral line analysis identifies aluminum, vanadium, and molybdenum in alloys without destroying the sample.

For baseline measurements, standardize preparation across laboratories using identical equipment.

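SAE AMS and ASTM standards establish performance-based methods, which define the results a laboratory must achieve rather than mandating specific instruments, keeping results consistent while leaving labs flexibility in equipment choice.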

Frequently Asked Questions

Can Titanium Be Detected in Recycled Materials Mixed With Other Metals?

Yes, you’ll detect titanium alloy in recycled sources mixed with other metals using XRF and LIBS technologies. These analyzers identify titanium from trace levels to pure metal, distinguishing alloys from contaminants with quantitative analysis in just three seconds.

How Do Temperature Variations Affect Titanium Detection Accuracy in Field Conditions?

Temperature variations alter titanium’s electromagnetic properties and thermal conductivity, reducing detection accuracy above 450°C. You’ll observe oxide layer formation, frequency shifts in resonance testing, and magnetic response changes that complicate field identification without calibrated compensation methods.

What Certifications Are Required to Operate Professional Titanium Detection Equipment?

You’ll need operator training certificates, NDT Level II credentials for professional inspection work, and adherence to ASTM and NIST certification standards. Calibrating detectors against traceable reference materials such as NIST SRM 3162a keeps your measurements accurate and defensible.

Are There Mobile Apps That Assist With Titanium Identification Procedures?

Mobile scanning apps can’t directly detect titanium since it’s non-magnetic. However, you’ll find app integration with specialized XRF analyzers and AI-based visual identification tools that provide composition analysis, offering you independent verification without relying on magnetic detection methods.

How Much Does Titanium Testing Cost for Small Businesses or Hobbyists?

Testing through independent labs starts at about $20 per sample. Costs scale with the testing method: basic elemental assays stay affordable, while specialized procedures cost more. Volume discounts and sample preparation requirements also affect your budget considerably.
